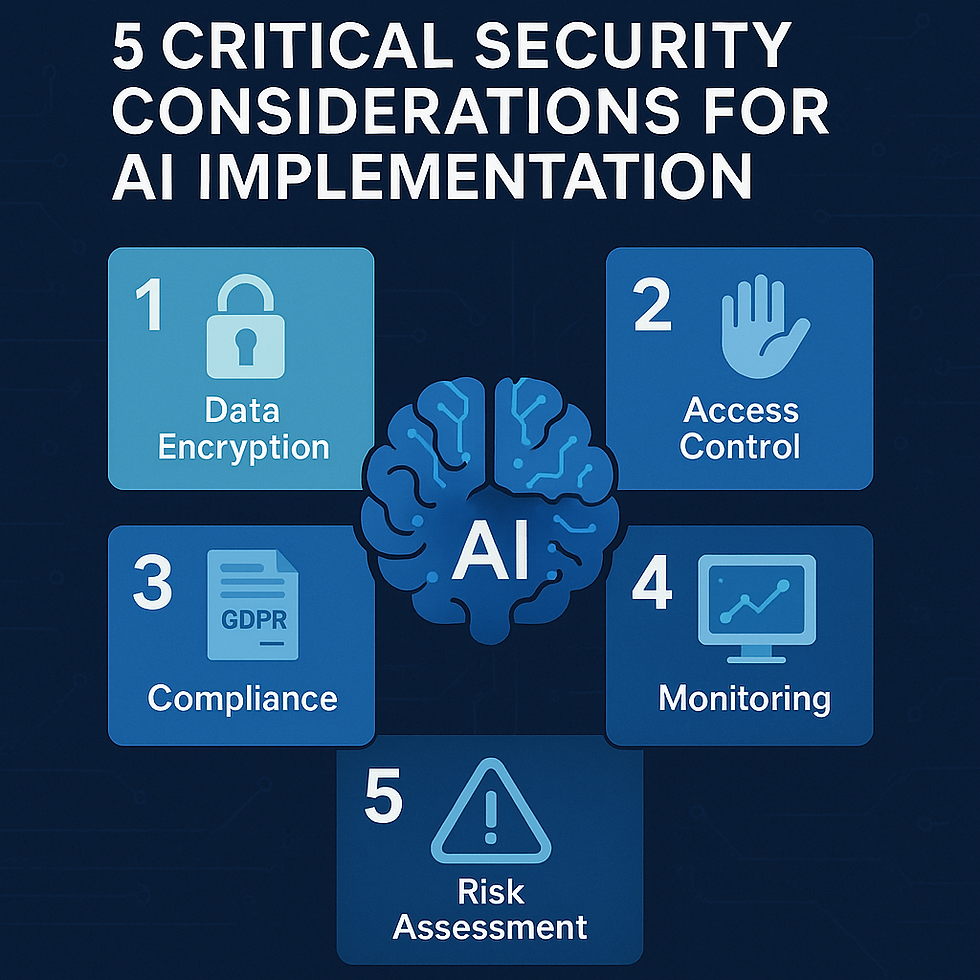

5 Critical Security Considerations for AI Implementation

- Jun 2, 2025

- 7 min read

Key Points

Securing data is crucial for AI systems, protecting against breaches and ensuring compliance.

Protecting AI models from attacks like adversarial manipulation is essential for reliable operation.

Securing the AI supply chain is critical, as third-party components can introduce vulnerabilities.

Access control and authentication are vital to prevent unauthorized access and insider threats.

Monitoring and incident response are necessary for detecting and addressing security issues promptly.

Introduction to AI Security

Artificial intelligence (AI) is transforming businesses by automating processes, enhancing decision-making, and driving innovation. However, as AI adoption grows, so do security risks. From data breaches to model manipulation, securing AI systems is critical to protect sensitive information, ensure compliance with regulations, and maintain customer trust. This article outlines five key security considerations for businesses implementing AI, drawing from authoritative sources to provide practical guidance.

Data Security: Safeguarding Sensitive Information

AI systems often process large volumes of sensitive data, making them prime targets for cyberattacks. Securing this data is foundational: breaches can lead to unauthorized access, compliance violations (e.g., under GDPR or CCPA), and financial losses. To mitigate these risks, businesses should encrypt data in transit and at rest, enforce strict access controls such as role-based access control (RBAC), and conduct regular audits to ensure compliance with data protection laws.

Model Security: Protecting Against Attacks

AI models are vulnerable to attacks such as adversarial examples, where inputs are manipulated to deceive the model, and data poisoning, where training data is tampered with. Protecting these models is essential for reliable operation, because a compromised model can produce incorrect outputs or biased decisions. Best practices include adversarial training, input validation, and regular monitoring of model performance to detect anomalies.

Supply Chain Security: Vetting Third-Party Components

Many AI systems rely on third-party components, such as pre-trained models or libraries, which can introduce vulnerabilities if not properly secured. Supply chain security matters because a single insecure component can compromise the entire system. Businesses should vet third-party providers, update dependencies regularly, and conduct security assessments to mitigate risks such as backdoors or outdated software.

Access Control and Authentication: Limiting Unauthorized Access

Ensuring that only authorized personnel can access AI systems and data is vital to preventing insider threats and data leaks. Weak access controls can expose critical business information, so robust authentication mechanisms are essential. Best practices include implementing multi-factor authentication (MFA), using RBAC to limit access, and regularly reviewing access logs for suspicious activity.

Monitoring and Incident Response: Detecting Threats in Real-Time

Continuous monitoring is necessary for detecting threats promptly, because sophisticated attacks on AI systems may otherwise go unnoticed. Robust monitoring combined with a rehearsed incident response plan limits the impact of breaches. Businesses should implement logging and automated alerting, develop tailored incident response plans, and conduct regular security drills to ensure readiness.

Detailed Analysis of AI Security Considerations

This section provides a comprehensive exploration of the five critical security considerations for AI implementation, tailored for businesses seeking to integrate AI securely. Drawing from authoritative sources, we delve into the rationale, risks, and best practices, ensuring a thorough understanding for decision-makers and technical teams. The analysis is informed by recent insights from industry leaders and standards bodies, reflecting the state of AI security as of May 28, 2025.

Background and Context

As of 2025, AI adoption is accelerating across industries, driven by advancements in machine learning and generative AI. However, this growth has been accompanied by heightened security risks, as AI systems introduce dynamic, probabilistic attack surfaces. Unlike traditional IT security, AI security must address threats specific to machine learning, such as adversarial attacks, model inversion, and data poisoning. The need for robust security is underscored by regulatory frameworks such as the EU AI Act, which mandates risk management for high-risk AI systems, and the GDPR, which requires data protection measures for personal data, including impact assessments for high-risk processing.

To address these challenges, businesses must adopt a security-first approach, integrating safeguards throughout the AI lifecycle, from development to deployment. This article synthesizes insights from multiple sources, including the OWASP AI Security and Privacy Guide, the UK National Cyber Security Centre’s (NCSC) AI Cyber Security Code of Practice, and industry reports from Perception Point and Elastic. These sources provide a foundation for identifying the most critical security considerations, ensuring alignment with both technical and business needs.

Detailed Security Considerations

The following table summarizes the five critical security considerations, their associated risks, and recommended best practices, based on the analysis of authoritative sources:

| Consideration | Description | Key Risks | Best Practices |
|---|---|---|---|
| Data Security | Protecting sensitive data used by AI systems from breaches and unauthorized access. | Data breaches, compliance violations, data leaks. | Implement encryption, access controls, regular audits, comply with GDPR/CCPA. |
| Model Security | Safeguarding AI models from attacks like adversarial manipulation and data poisoning. | Incorrect outputs, biased decisions, model theft. | Use adversarial training, input validation, monitor performance, regular updates. |
| Supply Chain Security | Securing third-party components like pre-trained models and libraries. | Insecure components, backdoors, outdated dependencies. | Vet providers, update dependencies, conduct security assessments. |
| Access Control and Authentication | Ensuring only authorized personnel can access AI systems and data. | Unauthorized access, insider threats, data leaks. | Implement MFA, RBAC, review access logs, train employees on secure practices. |
| Monitoring and Incident Response | Detecting and responding to security threats in real-time. | Undetected breaches, delayed response, prolonged exposure. | Use logging, automated alerting, develop incident response plans, conduct drills. |

Each consideration is explored in detail below, with additional context and examples to illustrate their importance.

1. Data Security

AI systems often handle large volumes of sensitive information, such as customer data, financial records, or proprietary business insights. The OWASP AI Security and Privacy Guide highlights that data breaches are a significant concern, as compromised data storage or transmission channels can lead to unauthorized access, violating privacy laws and causing reputational damage. Perception Point’s analysis further emphasizes the need for robust encryption and secure communication protocols to mitigate these risks.

For businesses, ensuring data security involves implementing end-to-end encryption, enforcing strict access controls, and conducting regular audits to comply with regulations like GDPR and CCPA. Techniques such as data anonymization and differential privacy can also enhance privacy, particularly for AI systems processing personal data. This consideration is critical for maintaining customer trust and avoiding legal penalties, making it a top priority for AI implementation.
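To make "differential privacy" concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query, assuming a simple in-memory list of records. The function names (`laplace_noise`, `private_count`) and the example data are hypothetical and not taken from the sources above; production systems should use a vetted differential-privacy library that also tracks the cumulative privacy budget, since naive floating-point noise sampling like this has known weaknesses.

```python
import math
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample from Laplace(0, scale) by inverse-CDF sampling."""
    while True:
        u = rng.random() - 0.5  # uniform on [-0.5, 0.5)
        if abs(u) < 0.5:  # reject the single endpoint where log(0) would occur
            break
    return -scale * math.copysign(1.0, u) * math.log(1.0 - 2.0 * abs(u))


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    A counting query has sensitivity 1: adding or removing one person's record
    changes the true count by at most 1, so noise of scale 1/epsilon suffices.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical example data: ages of customers in a training set.
ages = [23, 67, 45, 71, 34, 80, 52, 66]
rng = random.Random(42)
noisy = private_count(ages, lambda age: age >= 65, epsilon=1.0, rng=rng)
print(f"noisy count of customers aged 65+: {noisy:.2f}")
```

Each released answer is individually noisy, but the noise is unbiased, so aggregate statistics remain useful while any single customer's presence is masked.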

2. Model Security

AI models are the core of any AI system, but they are vulnerable to attacks that can compromise their integrity. The Elastic Blog discusses threats like adversarial attacks, where attackers manipulate inputs to deceive the model, and data poisoning, where training data is tampered with to alter model behavior. These attacks can lead to incorrect outputs, biased decisions, or even intellectual property theft, as noted in the Medium article “13 Key Principles for Securing AI Systems.”
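To show how small an adversarial manipulation can be, the sketch below applies the Fast Gradient Sign Method (FGSM), one well-known adversarial attack, to a toy logistic-regression model. The weights, input, and function names are hypothetical; real attacks target far larger models, but the principle of nudging every feature slightly in the direction that increases the model's loss is the same.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x) -> float:
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y: int, epsilon: float):
    """Fast Gradient Sign Method: shift each feature by epsilon in the
    direction that increases the binary cross-entropy loss.

    For logistic regression, d(loss)/dx = (p - y) * w.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical toy model and input whose true label is 1.
w, b = [2.0, -1.0, 0.5], -0.5
x = [1.0, 0.5, 0.2]
x_adv = fgsm(w, b, x, y=1, epsilon=0.4)
print(predict_proba(w, b, x))      # above 0.5: classified as 1
print(predict_proba(w, b, x_adv))  # below 0.5: classified as 0
```

No feature moves by more than 0.4, yet the prediction flips. Adversarial training counters this by generating such perturbed inputs during training and teaching the model to classify them correctly.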

To protect models, businesses should use adversarial training to make models more robust, validate and sanitize inputs to prevent malicious data, and regularly monitor performance for anomalies. The Perception Point article also recommends cryptographic checks and regular retraining to ensure model integrity, particularly against emerging threats like model inversion and extraction. This consideration is essential for ensuring AI systems operate as intended, especially in high-stakes applications like healthcare or finance.
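Input validation can be as simple as checking every request against an explicit schema before it reaches the model. The sketch below assumes a hypothetical tabular model with three numeric features; the feature names, ranges, and the `InvalidInput` exception are illustrative only. Note that schema and range checks reject malformed or out-of-range inputs but not carefully crafted in-range adversarial examples, so they complement adversarial training rather than replace it.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureSpec:
    name: str
    min_value: float
    max_value: float


# Hypothetical schema for a credit-scoring model; replace with your own.
SCHEMA = (
    FeatureSpec("age", 18, 120),
    FeatureSpec("income", 0, 10_000_000),
    FeatureSpec("num_accounts", 0, 100),
)


class InvalidInput(ValueError):
    """Raised when a request does not match the expected schema."""


def validate_input(features: dict) -> list:
    """Check a request against SCHEMA and return an ordered feature vector."""
    expected = {spec.name for spec in SCHEMA}
    missing = expected - set(features)
    unexpected = set(features) - expected
    if missing or unexpected:
        raise InvalidInput(f"missing={sorted(missing)} unexpected={sorted(unexpected)}")
    vector = []
    for spec in SCHEMA:
        value = features[spec.name]
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise InvalidInput(f"{spec.name}: expected a number, got {type(value).__name__}")
        if not math.isfinite(value):
            raise InvalidInput(f"{spec.name}: non-finite value")
        if not spec.min_value <= value <= spec.max_value:
            raise InvalidInput(f"{spec.name}: {value} outside [{spec.min_value}, {spec.max_value}]")
        vector.append(float(value))
    return vector
```

Rejecting unknown fields (rather than silently ignoring them) keeps the model's input surface explicit and makes probing attempts visible in logs.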

3. Supply Chain Security

Many AI systems rely on third-party components, such as pre-trained models, libraries, or APIs, which can introduce vulnerabilities if not properly secured. The Medium article’s Principle 3, “Protect AI Supply Chains,” underscores the importance of evaluating these components for risks, as insecure libraries or models can compromise the entire system. For example, a malicious pre-trained model could contain backdoors that attackers exploit, as highlighted in the OWASP guide.

Businesses should vet third-party providers, regularly update dependencies to patch known vulnerabilities, and conduct security assessments to identify risks. Maintaining a clear inventory of all components used in AI systems can also help track potential vulnerabilities, ensuring a secure supply chain. This consideration is particularly relevant for enterprises leveraging cloud-based AI services or open-source libraries, where supply chain risks are prevalent.
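One way to keep a component inventory honest is to pin a SHA-256 digest for every artifact (model weights, vendored libraries) and verify it before loading. The sketch below assumes a hypothetical manifest format, a plain mapping of relative path to expected digest; real projects may instead rely on their package manager's hash-pinning or an SBOM tool.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large model weights need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_inventory(manifest: dict, base_dir: Path) -> list:
    """Compare files under base_dir against a {relative path: sha256} manifest.

    Returns a list of problems; an empty list means every listed component is
    present and unmodified, and no unlisted file has appeared.
    """
    problems = []
    for rel_path, expected in sorted(manifest.items()):
        path = base_dir / rel_path
        if not path.is_file():
            problems.append(f"{rel_path}: missing")
        elif sha256_of(path) != expected:
            problems.append(f"{rel_path}: hash mismatch")
    # Files that are present but not inventoried are also worth flagging.
    for path in sorted(base_dir.rglob("*")):
        rel = path.relative_to(base_dir).as_posix()
        if path.is_file() and rel not in manifest:
            problems.append(f"{rel}: not in inventory")
    return problems
```

A pipeline would typically refuse to load any model or dependency when `verify_inventory` returns a non-empty list.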

4. Access Control and Authentication

Ensuring that only authorized personnel can access AI systems and data is crucial for preventing insider threats and unauthorized access. The OWASP guide emphasizes the importance of robust identity and access management (IAM), including multi-factor authentication (MFA) and role-based access control (RBAC). Weak access controls can lead to data leaks or misuse, especially in environments where employees have broad access to sensitive AI systems.
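A common second factor for MFA is a time-based one-time password (TOTP, RFC 6238), which builds on HOTP (RFC 4226). The sketch below uses only the Python standard library; the secret shown is the RFC's published test key, not a real credential. It is illustrative only: real deployments store per-user secrets securely (usually exchanged as base32), rate-limit attempts, and reject reuse of an already-accepted code, none of which is shown here.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over an 8-byte big-endian counter, then dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % (10 ** digits)).zfill(digits)


def totp(secret: bytes, timestamp=None, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP with the counter derived from Unix time."""
    if timestamp is None:
        timestamp = time.time()
    return hotp(secret, int(timestamp // step), digits)


def verify_totp(secret: bytes, submitted: str, timestamp=None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept codes from the current step or up to `window` steps either side (clock drift)."""
    if timestamp is None:
        timestamp = time.time()
    counter = int(timestamp // step)
    for drift in range(-window, window + 1):
        # compare_digest avoids leaking how many characters matched via timing.
        if hmac.compare_digest(hotp(secret, counter + drift, digits), submitted):
            return True
    return False


RFC_TEST_SECRET = b"12345678901234567890"  # test key from RFC 4226 / RFC 6238
```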

Best practices include implementing MFA for all users, using RBAC to limit access to only what is necessary, and regularly reviewing access logs for suspicious activity. Training employees on secure access practices can also reduce the risk of accidental breaches. This consideration is vital for businesses handling critical data, ensuring that access is tightly controlled to minimize exposure.
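The sketch below combines a deny-by-default RBAC check with a simple access-log review. The roles, permission strings, and log format are hypothetical; the point is the shape of the policy: a request succeeds only if the user has passed MFA and one of their roles explicitly grants the permission, and repeated denials are surfaced for review.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical role-to-permission mapping: each role gets only what it needs.
ROLE_PERMISSIONS = {
    "data_scientist": {"dataset:read", "model:train"},
    "ml_engineer": {"model:read", "model:deploy"},
    "auditor": {"logs:read"},
}


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset
    mfa_verified: bool


def is_allowed(user: User, permission: str) -> bool:
    """Deny by default: allow only if MFA passed and some role grants the permission."""
    if not user.mfa_verified:
        return False
    # Unknown roles map to an empty permission set rather than raising.
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)


def flag_suspicious(access_log, threshold: int = 3) -> list:
    """Return users with at least `threshold` denied attempts.

    access_log: iterable of (user_name, permission, allowed) tuples.
    """
    denials = Counter(user for user, _permission, allowed in access_log if not allowed)
    return sorted(user for user, count in denials.items() if count >= threshold)
```

Keeping the permission table as data rather than scattered `if` statements makes periodic access reviews a matter of reading one mapping.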

5. Monitoring and Incident Response

Continuous monitoring is essential for detecting security threats in real-time, as AI systems can be targeted by sophisticated attacks that may not be immediately apparent. The Perception Point article recommends implementing telemetry, logging, and automated alerting to track system behavior and flag anomalies. The Elastic Blog also highlights the importance of anomaly detection systems to identify unusual patterns in data access, enabling timely intervention.
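As an illustration of anomaly detection on data access, the sketch below flags periods whose access count deviates sharply from a trailing baseline, using a rolling z-score. This is one simple statistical technique chosen for clarity, not the method described by Perception Point or Elastic; the window size, threshold, and example counts are hypothetical and would need tuning on real telemetry.

```python
import math
import statistics


def flag_anomalies(counts, baseline_size: int = 24, z_threshold: float = 3.0):
    """Flag periods whose access count deviates sharply from the preceding window.

    counts: access counts per period (e.g., records read per hour by one account).
    Returns (index, count, z_score) tuples for periods with |z| >= z_threshold.
    """
    anomalies = []
    for i in range(baseline_size, len(counts)):
        window = counts[i - baseline_size:i]
        mean = statistics.fmean(window)
        stdev = statistics.pstdev(window)
        if stdev == 0:
            # A perfectly flat baseline: any change at all is treated as anomalous.
            if counts[i] != mean:
                anomalies.append((i, counts[i], math.copysign(math.inf, counts[i] - mean)))
            continue
        z = (counts[i] - mean) / stdev
        if abs(z) >= z_threshold:
            anomalies.append((i, counts[i], z))
    return anomalies


# Hypothetical hourly read counts for one service account: a stable pattern, then a spike.
hourly = [10, 12, 11, 9, 10, 11, 12, 10] * 3 + [11, 250, 10]
for index, count, z in flag_anomalies(hourly):
    print(f"hour {index}: {count} reads (z = {z:.1f})")
```

One design caveat: a flagged spike stays in the baseline for the following periods and inflates the standard deviation, which can mask a follow-up attack; production detectors often exclude flagged points from the baseline.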

Businesses should develop incident response plans tailored for AI systems, outlining procedures for detection, response, and recovery in case of attacks like data poisoning or model corruption. Regular security drills can ensure teams are prepared, minimizing the impact of breaches. This consideration is critical for maintaining operational continuity and responding effectively to emerging threats, particularly as AI systems become more integrated into business operations.

Additional Insights and Emerging Trends

The analysis also revealed emerging trends, such as the growing importance of generative AI security. Perception Point’s article lists new risks like sophisticated phishing attacks, direct prompt injections, and LLM privacy leaks, which are particularly relevant for businesses adopting generative AI tools. While these are not included in the top five considerations due to the broader focus on AI implementation, they highlight the evolving nature of AI security risks.

Regulatory frameworks, such as the EU AI Act, also play a significant role, classifying AI systems into risk levels (e.g., unacceptable, high, limited) and mandating security risk assessments for high-risk applications. The OWASP guide and NIST AI Risk Management Framework provide additional guidance, emphasizing security by design and lifecycle management. These standards reinforce the need for a proactive, comprehensive approach to AI security, aligning with the considerations outlined above.

Practical Implications for Businesses

For businesses implementing AI, these considerations are not just technical requirements but strategic imperatives. Prioritizing security can protect against financial losses, legal penalties, and reputational damage, while also building customer trust. Consulting with AI security experts can provide tailored guidance, ensuring that implementations are both innovative and secure. As of May 28, 2025, the landscape continues to evolve, with new threats and regulations shaping the future of AI security.

